Over the past few weeks, digital contact tracing has entered centre stage as a crucial element in strategies for exiting COVID-19 lockdown. But in order for digital contact tracing to succeed, people need to understand what it is, how it works, and the key challenges that need to be overcome. This short blog post is one attempt to do these three things, and to provide links to other articles for more information.

Digital contact tracing is simply the name given to a new kind of application that uses people’s mobile phones to keep track of whom they’ve been in close proximity to in the recent past. It relies on the assumption that most people carry a smartphone with them, and uses this assumption to translate the problem of keeping track of people to keeping track of their smartphones instead.

One naive approach might be to keep track of all the places a person has been, and to find others who were in the same places at the same time. But this approach poses significant privacy concerns, because you can tell a lot about people based on where they go, and how long they linger in each place. It also captures more data than necessary: note that, in order to determine that you’ve been near someone, you don’t necessarily need to know where you were when that happened.

How it Works: The DP3T/Apple-Google Approach

Instead, the approach being rolled out by Apple and Google is inspired by a technique from a multi-institution academic project called DP3T, which I have had the pleasure of being a little involved in. It has phones sense and identify each other using Bluetooth Low Energy (BLE), a variant of the same Bluetooth wireless technology now commonly used to connect wireless headphones, keyboards and mice. BLE is, in some sense, ideal for this kind of application: as its name suggests, it is designed to consume little power (important for those battery-hungry phones), and it is designed for low-bandwidth “discovery” applications, like the Apple iBeacons used in some retail shops and public spaces.

Here is the basic idea of how it works:

- Smartphones start acting as beacons, announcing themselves to other smartphones nearby using BLE.
- At the same time, they listen for other nearby beaconing smartphones, noting down each smartphone they have seen in a little “contact diary”.
- When a person becomes sick, they are instructed to get a test (or self-test), and to inform a public health authority digitally through an app. The health authority, in turn, confirms the case and relays it to everyone else’s smartphones as a confirmed infection.
- Every time the health authority announces a new infected patient, each smartphone checks its private “contact diary” for matches. If there is a match, its owner has been near a person who has declared themselves sick, implying they might be infected and should probably self-isolate. If not, they’re assumed to be safe.
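To make the four steps concrete, here is a toy simulation in Python. It is only a sketch: the `Phone` class and `encounter` function are hypothetical, and each phone announces a fixed name, deliberately ignoring the privacy measures discussed later.

```python
from dataclasses import dataclass, field


@dataclass
class Phone:
    """A toy phone: a beacon name plus a private contact diary (hypothetical model)."""
    beacon_id: str
    diary: set = field(default_factory=set)

    def hear(self, other: "Phone") -> None:
        # Step 2: note down every nearby beacon in the contact diary.
        self.diary.add(other.beacon_id)

    def check_exposure(self, infected_ids: set) -> bool:
        # Step 4: compare the published list against the private diary.
        return bool(self.diary & infected_ids)


def encounter(a: Phone, b: Phone) -> None:
    # Steps 1 and 2: both phones beacon and both listen, so each records the other.
    a.hear(b)
    b.hear(a)


alice, bob, carol = Phone("alice"), Phone("bob"), Phone("carol")
encounter(alice, bob)

# Step 3: Alice tests positive; the health authority publishes her beacon name.
published = {alice.beacon_id}

print(bob.check_exposure(published))    # True: Bob was near Alice
print(carol.check_exposure(published))  # False: Carol never met Alice
```

Note that the matching in step 4 happens on each phone, against its own diary; nothing about who met whom needs to leave the device.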

This is a simple description of the basic technique, but in practice there’s a bit more to it. One of the crucial details omitted in this description is how people’s privacy is preserved in the process. We’ll get back to this later.

Role in Reducing Disease Spread and Limitations

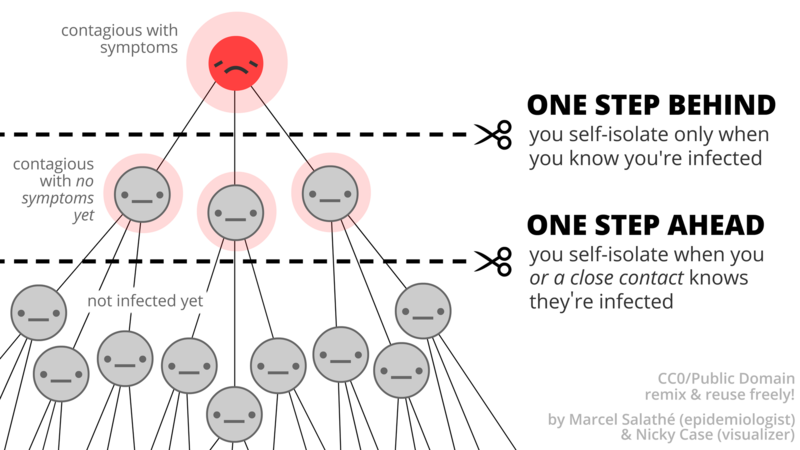

The role digital contact tracing plays in reducing infections is also relatively easy to understand. The goal is to inform people that they might be infected before they feel any symptoms. This is particularly important for COVID-19 because one of its nasty characteristics is that a person who has contracted the virus can become highly infectious several days before any symptoms appear. This means people will likely continue living their normal lives (near others) for a few crucial days where they can begin infecting others before they start to feel unwell.

Another nasty problem is that a significant number of people who get infected never end up showing any symptoms. Yet, they may be just as infectious as those who do.

If a person who is infected starts self-isolating before they get symptoms, or if we correctly inform those who are infected but don’t ever end up getting symptoms, this has the potential to drastically reduce the number of people they might unsuspectingly pass the virus on to. This translates to a direct reduction in R, the virus reproduction number often discussed in UK government briefings.

So how does digital contact tracing aim to achieve this? The goal is to keep track of the ways each person may have been exposed, and to notify them as soon as any of these contacts has been confirmed as ill. To do this, it treats every occasion on which a person comes near other people as a “potentially contagious” event. This makes sense because a primary mode of transmission of the virus is direct, between people, through the air, and this is most likely to happen when two people are near each other.

Unfortunately, however, this approach is limited in a few ways. First, direct person-to-person transmission isn’t the only way one can get infected. One could easily pick up the virus from touching a contaminated surface, as demonstrated by this NHK video of people at a buffet restaurant. It’s also possible for a person to be infected by inhaling aerosolised droplets containing the virus lingering in the air, minutes (or even, potentially, hours) after an infected person has left, particularly if they sneezed. Neither of these routes is accounted for by digital contact tracing, and both are among the ways it could fail to inform someone that they’ve been infected.

Another limitation is that being close to someone who’s infected doesn’t necessarily mean you were exposed. For instance, if two people are on opposite sides of a wall (or pane of glass) with no air circulating between them (such as people living in neighbouring flats, or one person inside a tube carriage and another on the platform), they could be technically near each other, and be registered as a possible exposure, without any possibility of airborne transmission.

An even greater limitation is that it needs enough people to use it for the system to make any difference. An encounter can only be detected if both people are running the app, so if only a small percentage of a population participates, the chance that any given infection passes between two app users becomes extraordinarily low. This diminishes the opportunities for digital contact tracing to make a difference.
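A back-of-the-envelope way to see why low adoption hurts so much: since both people in an encounter need the app, the fraction of encounters the system can see scales roughly with the square of the adoption rate. The sketch below assumes, hypothetically, that app users are spread uniformly and independently through the population, which real adoption patterns will not match exactly.

```python
def traceable_fraction(adoption: float) -> float:
    """Fraction of encounters in which both people run the app.

    Assumes each side of an encounter independently has the app
    with probability `adoption` (a simplifying assumption).
    """
    return adoption ** 2


for p in (0.2, 0.4, 0.6, 0.8):
    print(f"adoption {p:.0%} -> traceable encounters {traceable_fraction(p):.0%}")
```

Under this simple model, even 60% adoption would make only about 36% of encounters visible to the system, and 20% adoption only about 4%.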

The authors of the Science magazine paper from the Oxford Big Data Institute anticipate that a minimum 60% adoption rate among the UK population would be necessary for the app to achieve its desired epidemiological effect.

This is an ambitious target. While it may not seem inconceivable that an app commissioned and backed by a treasured institution such as the NHS could achieve such a high rate in principle, it is still very challenging for several reasons. First, it’s important to put this number into perspective: while some 78% of the UK population are thought to have a Facebook account, its daily user base is thought to be closer to only 44% of the UK population. This means that significantly more people would need to be running the contact tracing app in the UK than use the most popular social network on a daily basis.

Second, unlike the Facebook app, this kind of digital contact tracing is a new technology, which brings several problems of its own. To begin with, it only supports a subset of more recent smartphones that support Bluetooth Low Energy. This will directly limit the number of people who can use it, especially in low-income or less tech-savvy segments of the population, who may not be able to afford new, expensive handsets or may have no incentive to upgrade frequently.

Another significant problem is that it’s an unfamiliar technology, and a potentially privacy-invasive one at that. It sounds creepy, and even with the kinds of privacy guarantees we discuss below, people might not understand what these guarantees mean, or believe that they will work in practice. And people have plenty of reason to be sceptical: the many high-profile data-privacy scandals of recent years, from Cambridge Analytica to the Snowden revelations, along with endless data breaches in the news, have eroded people’s faith in the ability of companies and governments to preserve their privacy.

Privacy in the DP3T/Google-Apple Approach

Since contact data can be very revealing, the DP3T team sought to build strong privacy guarantees into the protocol itself, making it very difficult for anyone (institutions or adversaries) to use the protocol to violate a person’s privacy. Without such guarantees, contact tracing technologies could give companies (and governments) access to even more granular data about where people are at every moment of the day, and whom they are with, eventually revealing the entire structure of their (real-life) social networks. By contrast, the DP3T/Apple-Google approach is designed so that it can do its job effectively while letting companies (such as Apple, Google, platforms or app companies), governments (including health authorities) and malicious users (hackers) learn as little as possible.

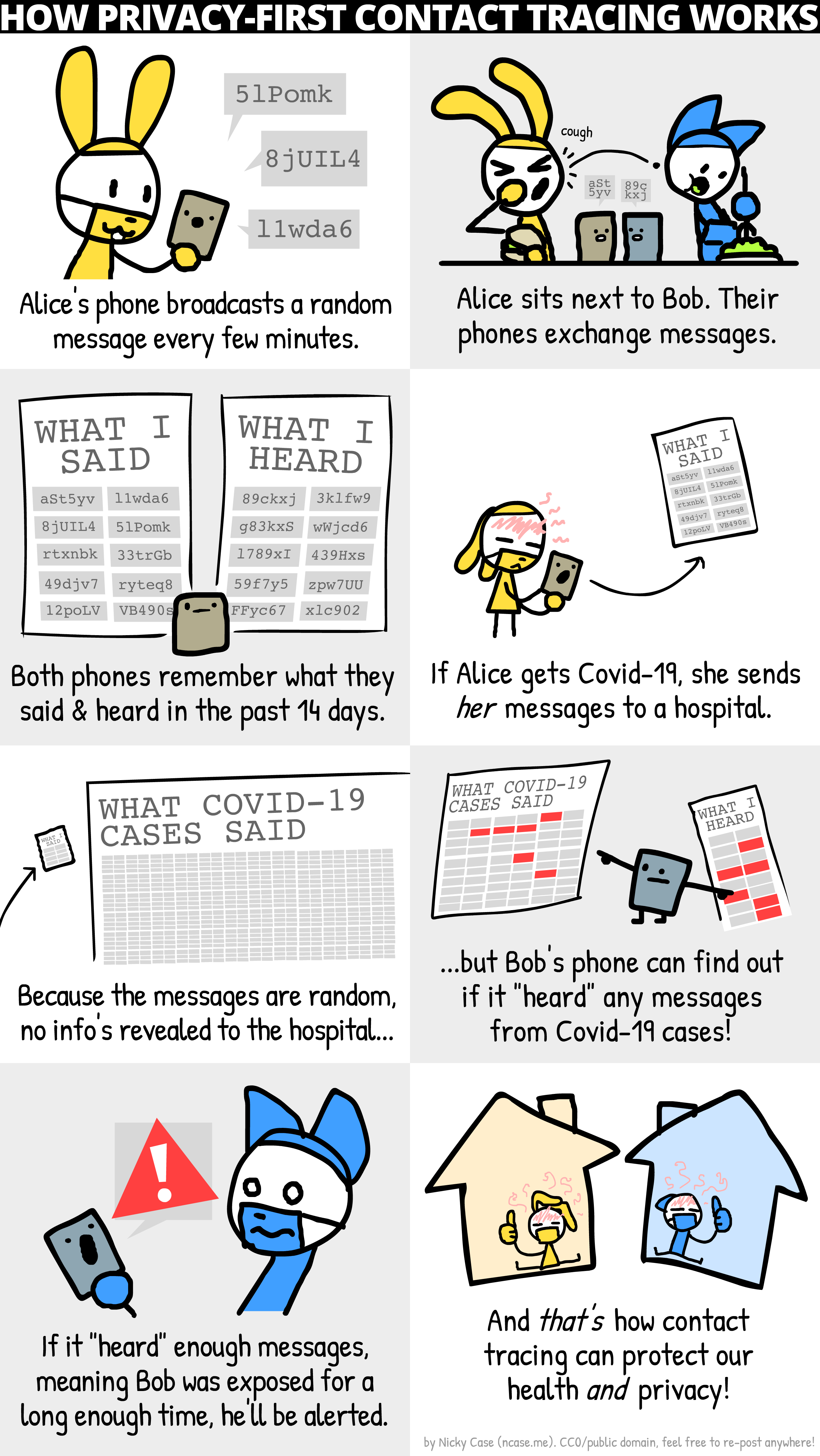

Here’s a simple description of how this works within the 4-step scheme described above. At the start of every day, each smartphone plans out 96 different random “disguises”, one for every 15-minute block of the day. The smartphone “wears” each disguise when announcing itself as a beacon to nearby phones, and at the end of every 15 minutes it switches to the next disguise in its plan. Because all beacon announcements are made using these disguises, phones can only see each other’s disguises. As a result, each phone knows only which disguises it has seen and which it has used itself; it has no knowledge of how the disguises it has seen correspond to particular people or devices in real life.
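DP3T and the Apple-Google system derive their rotating identifiers cryptographically from a secret key held on the phone, so the day’s plan does not have to be stored or shared as a long list. The sketch below imitates that idea with HMAC-SHA256 over a hypothetical daily key and the slot number; it is a stand-in for illustration, not the exact key schedule of either system.

```python
import hashlib
import hmac
import secrets

SLOTS_PER_DAY = 24 * 60 // 15  # 96 fifteen-minute blocks


def plan_disguises(day_key: bytes) -> list[bytes]:
    """Derive one pseudo-random 16-byte disguise per 15-minute slot.

    Simplified sketch: HMAC-SHA256(day_key, slot index), truncated to 16 bytes.
    """
    return [
        hmac.new(day_key, slot.to_bytes(2, "big"), hashlib.sha256).digest()[:16]
        for slot in range(SLOTS_PER_DAY)
    ]


def disguise_at(disguises: list[bytes], minute_of_day: int) -> bytes:
    # The phone switches to the next disguise every 15 minutes.
    return disguises[minute_of_day // 15]


day_key = secrets.token_bytes(32)  # fresh secret each day; never broadcast
plan = plan_disguises(day_key)

print(len(plan))  # 96
print(disguise_at(plan, 9 * 60) == disguise_at(plan, 9 * 60 + 14))  # True: same block
```

Without the day key, an observer sees only a stream of unrelated-looking 16-byte values, which is what prevents nearby phones from linking one disguise to the next.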

To complete this disguise scheme, when a person needs to report to a health authority that they’ve been infected, their phone hands over the list of disguises it used. Depending on how this handover is done, the health authority may (or may not) end up learning a correspondence between the disguises and the person’s identity. Either way, it keeps this information private, marks the disguises provided as infected, and disseminates them to the public. The public only ever learns which disguises were confirmed to belong to infected people, not who their owners were.
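In practice, DP3T’s low-cost design and the Apple-Google system compress this handover: rather than the full list, the infected person’s phone uploads the secret key(s) from which its disguises were derived, and every other phone regenerates the disguises locally and compares them with its own diary. The sketch below reuses the simplified HMAC derivation (again a stand-in, not the real key schedule); the names and diary contents are hypothetical.

```python
import hashlib
import hmac
import secrets


def plan_disguises(day_key: bytes, slots: int = 96) -> set[bytes]:
    """Re-derive a day's disguises from its key (simplified HMAC stand-in)."""
    return {
        hmac.new(day_key, i.to_bytes(2, "big"), hashlib.sha256).digest()[:16]
        for i in range(slots)
    }


def exposed(diary: set[bytes], published_keys: list[bytes]) -> bool:
    """True if any disguise in the local diary came from a published key."""
    return any(diary & plan_disguises(key) for key in published_keys)


# Alice's phone keeps a secret daily key; her broadcast disguises derive from it.
alice_key = secrets.token_bytes(32)
alice_disguises = plan_disguises(alice_key)

# Bob's private contact diary: one of Alice's disguises plus a stranger's.
bob_diary = {next(iter(alice_disguises)), secrets.token_bytes(16)}

# Alice tests positive; the health authority republishes her key, with no name attached.
published_keys = [alice_key]

# Bob's phone does the matching locally.
print(exposed(bob_diary, published_keys))  # True
```

The health authority never needs to see Bob’s diary; it only relays keys, and the comparison happens on Bob’s phone.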

If this didn’t make any sense, (or even if it did), you might want to refer to this brilliant comic illustrating how it works, by Nicky Case for the DP3T team (which is also available on GitHub).

Depending on how closely you are following, you may immediately realise that it might be possible to arrange a situation where one person could figure out that a disguise corresponds to a particular friend or other person, or, more importantly, that they have (or have not) been infected. Namely, you could arrange to be completely isolated with a target individual, and then check your own phone to see what their disguise is. But this is not terribly useful, because that disguise would last at most 15 more minutes before switching to a completely different one; thus, your ability to “identify” the individual by their phone’s disguise would be limited to the time window in which you were co-present with them, plus a short window (up to 15 minutes) afterwards.

A slightly bigger privacy risk is that you could end up learning that the person was (later) infected, by watching the public health authority’s announcements of newly confirmed infected disguises and checking whether any of them matched the disguise you saw your target use.
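The sketch below shows why this attack is so simple if raw disguises are readable: it is just a lookup of a noted-down disguise in the published list. The attacker, the notes and the disguise values are all hypothetical, and the attack assumes an app can read the disguises its phone has seen.

```python
import secrets


def linked_reports(notes: dict[bytes, str], published: set[bytes]) -> list[str]:
    """Names whose recorded disguise later shows up in the infected list."""
    return [who for disguise, who in notes.items() if disguise in published]


# Hypothetical attacker: while alone with the target, their phone saw this
# disguise (a random stand-in value here) and they noted whom it belonged to.
target_disguise = secrets.token_bytes(16)
attacker_notes = {target_disguise: "my neighbour"}

# Later, the health authority publishes newly confirmed infected disguises.
published = {secrets.token_bytes(16), target_disguise, secrets.token_bytes(16)}

print(linked_reports(attacker_notes, published))  # ['my neighbour']
```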

This latter risk is currently mitigated by Apple and Google, who prevent apps from ever gaining access to the disguises either seen or used. Instead, the contact diaries are locked away in a digital vault deep within the phone’s operating system, so that only the part of the operating system responsible for making matches can access these records.

Privacy Not Guaranteed? The NHSX Approach

The NHSX app uses the same basic idea, but with a few significant differences. Instead of generating a selection of different “disguises” for the day, each smartphone generates only one identity (called the SONAR ID), which it discloses to the NHSX servers. Since a single disguise (and one that’s shared with someone) isn’t really much of a disguise, the metaphor breaks down here; it’s easier to think of this identifier as a pseudonym: a “name” that doesn’t directly correspond to the person’s real (offline) name, but uniquely identifies them anyway.

Since broadcasting the pseudonym itself would let anyone trivially learn associations between people’s offline identities and their pseudonyms, the NHSX app encrypts the pseudonym (yielding something called the TRANSMIT ID) before broadcasting it. It also periodically changes (“rotates”) this encrypted version of the pseudonym, to make it difficult for users to link the encrypted pseudonym to a person’s offline identity.
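The key property is that rotation hides the pseudonym from other phones but not from whoever holds the decryption key. The toy sketch below illustrates this with a hypothetical shared `SERVER_KEY` and an HMAC-based keystream; it is not the NHSX app’s actual cryptography. A real deployment would use public-key encryption, so that phones can encrypt but only the server can decrypt; here the phone and server share one key purely for brevity, which would be insecure in practice.

```python
import hashlib
import hmac
import secrets

# Hypothetical toy parameters, not the NHSX app's real cryptography.
SERVER_KEY = secrets.token_bytes(32)
NONCE_LEN = 16


def _keystream(nonce: bytes, length: int) -> bytes:
    # HMAC-SHA256 output used as a single-block keystream (at most 32 bytes).
    return hmac.new(SERVER_KEY, nonce, hashlib.sha256).digest()[:length]


def make_transmit_id(sonar_id: bytes) -> bytes:
    """'Encrypt' a pseudonym with a fresh nonce, so each rotation looks unrelated."""
    nonce = secrets.token_bytes(NONCE_LEN)
    ks = _keystream(nonce, len(sonar_id))
    return nonce + bytes(a ^ b for a, b in zip(sonar_id, ks))


def server_decrypt(transmit_id: bytes) -> bytes:
    """Anyone holding SERVER_KEY (the server, in the intended design) recovers the pseudonym."""
    nonce, body = transmit_id[:NONCE_LEN], transmit_id[NONCE_LEN:]
    ks = _keystream(nonce, len(body))
    return bytes(a ^ b for a, b in zip(body, ks))


sonar_id = secrets.token_bytes(16)
first, second = make_transmit_id(sonar_id), make_transmit_id(sonar_id)
print(first != second)  # True: the two broadcasts look unrelated
print(server_decrypt(first) == server_decrypt(second) == sonar_id)  # True: the server links them
```

This is the crux of the difference from the DP3T/Apple-Google design: there, no party holds a key that links one day’s disguises back to a persistent identity unless the person chooses to report.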

The main difference from the DP3T/Apple-Google approach discussed earlier is how much the NHSX server can learn about people. In the DP3T/Apple-Google scheme, the server at most learns the disguises of those who are infected (and in some cases does not even need to associate these disguises with an offline identity). In the NHSX app, the NHSX servers learn not just the identities of those who have reported themselves as infected, but also the identities (pseudonyms) of everyone the infected person was near, including how long, how often, and when these exposures occurred. Since the NHSX server can decrypt the pseudonyms, it can also track these people through contact logs that others subsequently upload. Through this keyhole, the NHSX servers gain significant ability to reconstruct the social graphs and activities of those who are infected and exposed.
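To see how quickly this adds up, the sketch below merges a few uploaded contact logs into a weighted graph of pseudonyms. It assumes the server has already decrypted the TRANSMIT IDs, and the upload format (contact pseudonym, start time, minutes together) is hypothetical rather than the NHSX app’s real schema.

```python
from collections import defaultdict

# Hypothetical, already-decrypted uploads: uploader pseudonym -> list of
# (contact pseudonym, encounter start, minutes together). Not the real NHSX schema.
uploads = {
    "sonar-A": [("sonar-B", "2020-05-04T09:00", 20), ("sonar-C", "2020-05-04T13:30", 5)],
    "sonar-B": [("sonar-A", "2020-05-04T09:00", 20), ("sonar-D", "2020-05-05T18:10", 45)],
}


def build_contact_graph(uploads):
    """Merge uploaded contact logs into a weighted, undirected graph of pseudonyms."""
    graph = defaultdict(dict)
    seen = set()
    for uploader, contacts in uploads.items():
        for other, start, minutes in contacts:
            # Skip an encounter already reported by the other side
            # (assumes both phones log the same start time).
            event = (frozenset((uploader, other)), start)
            if event in seen:
                continue
            seen.add(event)
            for a, b in ((uploader, other), (other, uploader)):
                graph[a][b] = graph[a].get(b, 0) + minutes
    return dict(graph)


graph = build_contact_graph(uploads)
print(graph["sonar-D"])  # {'sonar-B': 45}: D never uploaded anything, yet appears
```

Only two people uploaded here, yet the server learns about four pseudonyms and when and for how long they met, which is the kind of social-graph reconstruction described above.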

The initial lack of clarity around the NHSX approach created a lot of opposition and push-back from privacy advocates. In response, NHSX published the complete source code of its app as an act of “full disclosure”; however, it has yet to disclose the code of its server infrastructure (including proprietary algorithms), where key data-handling questions remain unanswered. The Data Protection Impact Assessment of the NHSX App Trial on the Isle of Wight sought to clarify some of these issues, but as Michael Veale’s analysis and a summary by the Open Rights Group show, many concerns remain outstanding, including how the app’s data capture and handling practices should be interpreted under the GDPR.

Summary

Digital contact tracing offers an exciting new idea to address one of COVID-19’s worst characteristics: its stealthy nature. However, there are significant barriers that will need to be overcome for it to make a real difference.

Comments or corrections? Please email Max Van Kleek or contact him on Twitter (@emax).