RTI Student Network

Join our international organisation for graduate students committed to making future technologies more responsible

The Responsible Technology Institute (RTI) has started a new initiative to connect graduate students from all disciplines and nations interested in topics related to responsible research and innovation. We will also hold monthly reading groups and facilitate work-in-progress seminars for research students.

By joining the RTI Student Network, you will:

- connect with other students who share your interest in responsible innovation

- deepen and broaden your knowledge of related topics through discussions and presentations

- receive supportive feedback from fellow students on ongoing research projects

- have the opportunity to shape an emerging global network

If you would like to become a member, please reach out to Tyler Reinmund (tyler.reinmund.20@ucl.ac.uk) and Lize Alberts (lize.alberts@cs.ox.ac.uk) and include your name, university, year of study (e.g. Master’s, PhD), and research interest(s).

Thanks and we look forward to hearing from you!

Consent for embedding YouTube videos

Respecting users' privacy choices

TLDR: Embed YouTube videos, whilst respecting users’ privacy with our plugin for your website.

While working on our new website, we wondered how to embed YouTube videos in a way that reduces data sharing with Google and respects users’ data protection preferences.

EU data protection legislation and similar regimes set high requirements for sharing data with third parties, such as YouTube. Asking for user consent is usually the most straightforward way to establish a legal basis for such data sharing.

Usage

To use our tool, integrate the CSS and JavaScript from the GitHub repository into your website. You can then embed any YouTube video with the following code:

<div class="video_wrapper" style="background-image: url('');">
  <div class="video_trigger" data-source="">
    <p class="text-center">Playing this video requires sharing information with YouTube.<br><a target="_blank" href="https://tosdr.org/#youtube">More information</a></p>
    <input type="button" class="video-btn" value="Agree" />
  </div>
  <div class="video_layer"><iframe src="" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe></div>
</div>

Note: Compliance with the law has not been checked by lawyers. No liability is accepted beyond the minimum legal requirements. Use at your own risk.

gdpr4devs.com

Guide to GDPR for App Developers

TLDR: We created a concise overview of data protection obligations for app developers at gdpr4devs.com.

App developers face an overwhelming number of obligations when it comes to data protection. Not only do individual countries have comprehensive data protection regimes, but the app store providers themselves also impose additional requirements.

Konrad’s MSc thesis in Computer Science, supervised by Max van Kleek, analysed a wide range of documents that app developers must consider for data protection in the European Union. The analysis of these documents resulted in a set of concise developer guidelines.

We shared these guidelines at gdpr4devs.com, in the hope that some app developers find them useful. Instead of providing a lengthy legal document, these guidelines represent the personal view of an app developer. They are by no means exhaustive, complete, or proven in court, nor do they replace formal legal advice.

ARETHA

An Ethical, Respectful, and Privacy-Preserving Smart Assistant for the Home

Virtual assistants such as Amazon’s Alexa, Google Home, and Apple’s Siri are now used by millions of people every day. As they have become more sophisticated, people have begun using them for much more than checking the weather: shopping, scheduling, and timekeeping, among other things.

As these devices become more central to people’s lives, they start to get privileged access to many private aspects of people’s lives: not only access to their private spaces, such as what is said at home or in the bedroom, but also the kinds of things people do and buy. Colluding with smartphone apps, such devices will soon be able to infer your emotional and physical state, your plans and intents, your income, your conditions, and your personal activities.

How do we know these virtual assistants are genuinely serving their users with their best interests in mind? How do we also know what the companies behind these devices will do with your data?

As we have written previously about “respectful AI”, this is not just about privacy, although privacy is an important element. This is about whether such assistants genuinely serve you with your best interests in mind. Will a large online retailer, such as Amazon, always design its virtual assistant to get you only the things you need via the best means of getting them–or might they, for example, design their virtual assistant to make them more money, such as making you want more things?

We think that, in the future, the growing importance of virtual assistants will underscore the need for neutral virtual assistants for and by the people. By “by the people”, we mean not retailers or advertising companies, but free and open source developers who are genuinely neutral and have users’ best interests in mind.

To that end, we have taken the first step with the ARETHA (Artificial Respect-Enabled Transparent Home Assistant) project, a FOSS virtual assistant platform for experimenting with respectful behaviours using DIY hardware for a smarter home.

The prototype is under development and not ready for public use. Check out the GitHub if you’d like to contribute!

X-Ray Refine

Supporting the Exploration and Refinement of Information Exposure Resulting from Smartphone Apps

Most smartphone apps collect and share information with various first and third parties; yet, such data collection practices remain largely unbeknownst to, and outside the control of, end-users.

In this paper, we seek to understand the potential for tools to help people refine their exposure to third parties, resulting from their app usage. We designed an interactive, focus-plus-context display called X-Ray Refine (Refine) that uses models of over 1 million Android apps to visualise a person’s exposure profile based on their durations of app use. To support exploration of mitigation strategies, Refine can simulate actions such as app usage reduction, removal, and substitution.

A lab study of Refine found participants achieved a high-level understanding of their exposure, and identified data collection behaviours that violated both their expectations and privacy preferences. Participants also devised bespoke strategies to achieve privacy goals, identifying the key barriers to achieving them.
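The idea of an exposure profile and of simulating mitigation actions can be illustrated with a minimal sketch. This is not the Refine implementation: the app names, company names, and the choice to weight exposure by minutes of use are assumptions made purely for illustration.

```python
from collections import defaultdict

# Hypothetical mapping from apps to the third-party companies they send data to.
APP_TRACKERS = {
    "news_app": {"AdCo", "MetricsInc"},
    "game_app": {"AdCo"},
    "notes_app": set(),
}


def exposure_profile(usage_minutes, app_trackers=APP_TRACKERS):
    """Sum the minutes of app use per third-party company (assumed weighting)."""
    profile = defaultdict(float)
    for app, minutes in usage_minutes.items():
        for company in app_trackers.get(app, set()):
            profile[company] += minutes
    return dict(profile)


def simulate_reduction(usage_minutes, app, factor):
    """Return a copy of the usage data with one app's usage scaled by factor."""
    updated = dict(usage_minutes)
    if app in updated:
        updated[app] = updated[app] * factor
    return updated


def simulate_removal(usage_minutes, app):
    """Return a copy of the usage data without the given app."""
    return {a: m for a, m in usage_minutes.items() if a != app}


def simulate_substitution(usage_minutes, old_app, new_app):
    """Return a copy of the usage data with old_app's time moved to new_app."""
    updated = dict(usage_minutes)
    minutes = updated.pop(old_app, 0)
    updated[new_app] = updated.get(new_app, 0) + minutes
    return updated
```

With a usage record such as `{"news_app": 30, "game_app": 60}`, comparing `exposure_profile` before and after `simulate_substitution(usage, "news_app", "notes_app")` shows which companies drop out of the profile when one app is swapped for an alternative.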

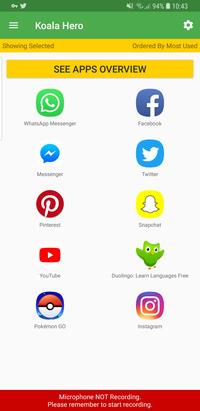

TrackerControl

A privacy tool for Android that can reduce tracking and ads

We released the first prototype of TrackerControl for Android.

TrackerControl allows users to monitor and control the widespread, ongoing, hidden data collection in mobile apps about user behaviour (‘tracking’).

To visualise this tracking, TrackerControl uses a comprehensive database of tracker companies from the X-Ray project, developed by Professor Max Van Kleek (University of Oxford) and others. The database reveals the companies behind tracking to users and allows them to block tracking selectively.

The app further aims to educate users about their legal rights under current EU Data Protection Law (i.e. GDPR and the ePrivacy Directive).

Under the hood, TrackerControl uses Android’s VPN functionality to analyse apps’ network communications locally on the Android device. All network communications pass through a local VPN server, which enables the analysis by TrackerControl. In other words, no external VPN server is used, and hence no network data leaves the user’s device for the purposes of tracker analysis.
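The matching step that such a local analysis might perform for each observed hostname can be sketched as follows. This is an illustrative sketch, not TrackerControl's code: the domain names, company names, and function names are hypothetical, and the interception of traffic through the local VPN is not shown.

```python
# Hypothetical excerpt of a tracker database: domain -> company behind it.
TRACKER_DOMAINS = {
    "tracker.example": "Example Analytics",
    "ads.example.net": "Example Ads",
}


def find_tracker(hostname, tracker_domains=TRACKER_DOMAINS):
    """Return the company for hostname or any of its parent domains, else None."""
    labels = hostname.lower().rstrip(".").split(".")
    # Try the full hostname first, then progressively shorter parent domains.
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in tracker_domains:
            return tracker_domains[candidate]
    return None


def should_block(hostname, blocked_companies, tracker_domains=TRACKER_DOMAINS):
    """Block a connection only if it goes to a company the user chose to block."""
    company = find_tracker(hostname, tracker_domains)
    return company is not None and company in blocked_companies
```

Because the lookup only needs the hostname and a database stored on the device, a check like this can run entirely locally, which is consistent with the point above that no network data has to leave the device for tracker analysis.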

TrackerControl was developed by Konrad Kollnig, as part of his MSc thesis in Computer Science at the University of Oxford, under the supervision of Professor Max Van Kleek.

Overall, TrackerControl aims to inform, empower, and educate users with regard to tracking in apps, that is, the collection of data about user behaviour.

KOALA

Kids Online Anonymity and Lifelong Autonomy

KOALA (Kids Online Anonymity & Lifelong Autonomy) is an impact acceleration project funded by Oxford University’s EPSRC Impact Acceleration Account, in partnership with the Anna Freud National Centre for Children and Families. The general goal of the KOALA project is to investigate the impact of the personal data collection practices of mobile apps upon the general well-being of young children aged 6-10.

The background for the project is that tablet computers are becoming the primary means for young children to go online. However, few studies have examined how young children under 11 perceive and cope with personal data privacy during their interactions with these mobile technologies. Research in our current EPSRC SOCIAM project has revealed that a large amount of personal information is tracked while young children are interacting with tablet computers. Although the impact of these technologies upon the well-being of adults or teenagers is better understood, we know little about their impact upon young children.

The main objective of KOALA is thus to work with mental health researchers from the Anna Freud National Centre, parents and educators of young children, as well as young children themselves, to better understand the impact of personal data collection by mobile apps upon the well-being and health of young children.